Newline produces effective courses for aspiring lead developers

Explore a wide variety of content to fit your specific needs

article

NEW RELEASE

Free

Opus 4.6: What's New About It?

Watch: Introducing Claude Opus 4.6 by Anthropic

Claude Opus 4.6 introduces significant upgrades in task planning, autonomy, and accuracy. The model reportedly plans more carefully and stays on task longer than previous versions, reducing errors in complex workflows. Users report that it handles multi-step tasks more consistently, avoiding the "chunk-skipping" issues seen in Opus 4.5. For example, documentation parsing tasks that previously failed due to skipped syntax are now handled reliably. The Opus series has evolved rapidly in 2025:

article

NEW RELEASE

Free

LoRA Fine‑T Checklist: Ensure Stable Fine‑Tuning

A LoRA fine-tuning checklist ensures efficient model adaptation while maintaining stability. Below is a structured overview of critical steps, timeframes, and success criteria.

1. Dataset Preparation
2. Hyperparameter Tuning

article

NEW RELEASE

Free

LoRA Fine‑T vs QLoRA Fine‑T: Which Saves Memory?

Watch: QLoRA: Efficient Finetuning of Quantized LLMs Explained by Gabriel Mongaras

The Comprehensive Overview section provides a structured comparison of LoRA and QLoRA, highlighting their trade-offs in memory savings, computational efficiency, and implementation complexity. For instance, QLoRA’s 4-bit quantization achieves up to 75% memory reduction, a concept explored in depth in the Quantization Impact on Memory Footprint section. As mentioned in the GPU Memory Usage Comparison section, LoRA reduces memory requirements by ~3x, while QLoRA achieves ~5-7x savings, though at the cost of added quantization overhead. Developers considering implementation timelines should refer to the Implementation Steps for LoRA Fine-T and QLoRA Fine-T section, which outlines the technical challenges and setup durations for both methods.

Fine-tuning large language models (LLMs) has become a cornerstone of modern AI development, enabling organizations to adapt pre-trained models to specific tasks without rebuilding them from scratch. As LLMs grow in scale (models like Llama-2 and Microsoft’s phi-2 now contain billions of parameters), training from scratch becomes computationally infeasible. Fine-tuning bridges this gap, allowing developers to retain a model’s foundational knowledge while tailoring its behavior to niche applications. For example, a healthcare startup might fine-tune a general-purpose LLM to understand medical jargon, improving diagnostic chatbots without requiring a custom-trained model from the ground up.
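To make the memory figures above concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 7-billion-parameter model and a single hypothetical 4096 x 4096 weight matrix with LoRA rank 8; none of these numbers come from the article. It counts weight storage only, so it does not reproduce the ~3x or ~5-7x end-to-end figures, which also depend on gradients, optimizer state, activations, and quantization constants.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds for one d_out x d_in weight matrix.

    LoRA learns two low-rank factors, B (d_out x rank) and A (rank x d_in),
    while the original matrix stays frozen.
    """
    return rank * (d_out + d_in)


# Hypothetical model size, chosen only for illustration.
NUM_PARAMS = 7_000_000_000

fp16_gb = weight_memory_gb(NUM_PARAMS, 16)  # 16-bit base weights
int4_gb = weight_memory_gb(NUM_PARAMS, 4)   # 4-bit quantized base weights
reduction = 1 - int4_gb / fp16_gb           # fraction of weight memory saved

# Hypothetical layer shape and rank, again only for illustration.
full_matrix_params = 4096 * 4096
adapter_params = lora_trainable_params(4096, 4096, rank=8)
trainable_fraction = adapter_params / full_matrix_params

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f"4-bit weights:  {int4_gb:.1f} GB ({reduction:.0%} smaller)")
print(f"LoRA adapter:   {adapter_params:,} trainable params "
      f"({trainable_fraction:.2%} of the full matrix)")
```

Going from 16 bits to 4 bits per weight is where the "up to 75%" figure comes from, and the adapter count shows why LoRA trains only a small fraction of the parameters in each adapted matrix.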

article

NEW RELEASE

Free

How to Understand LLM Meaning in AI

Watch: LLMs EXPLAINED in 60 seconds #ai by Shaw Talebi

Understanding what an LLM (Large Language Model) is matters in AI because these models form the foundation of modern natural language processing. An LLM is a type of artificial intelligence trained on massive amounts of text data to recognize patterns, generate human-like text, and perform tasks like translation, summarization, and code writing. Unlike general AI, LLMs specialize in language tasks, making them essential tools for developers, researchers, and businesses. For structured learning, platforms like newline offer courses that break down complex AI concepts into practical, project-based tutorials. As mentioned in the Why Understanding LLM Meaning Matters section, mastering this concept opens opportunities across industries. For hands-on practice, newline’s AI Bootcamp offers guided projects and interactive demos to apply LLM concepts directly. By balancing theory with real-world examples, learners can bridge the gap between understanding LLMs and implementing them effectively. See the Hands-On Code Samples for LLM Evaluation section for practical applications of these models.

article

NEW RELEASE

Free

In-Context Learning vs Fine‑Tuning: Which Is Faster?

In the world of large language models (LLMs), in-context learning and fine-tuning are two distinct strategies for adapting models to new tasks. In-context learning leverages examples embedded directly in the input prompt to guide the model’s response, while fine-tuning involves retraining the model on a specialized dataset to adjust its internal parameters. Both approaches have strengths and trade-offs, and choosing between them depends on factors like time, resources, and task complexity. Below, we break down their key differences, performance trade-offs (see the Performance Trade-offs: Accuracy vs Latency section for more details on these metrics), and practical use cases to help you decide which method aligns with your goals.

In-context learning works by including a few examples (called few-shot examples) directly in the input prompt. For instance, if you want a model to classify customer support queries, you might provide examples like: Input: "Customer: My account is locked. Bot: Please verify your identity..." The model uses these examples to infer the task, without altering its internal weights. This method is ideal for scenarios where you cannot retrain the model, such as using APIs like GPT-4, where users only control the prompt. See the Understanding In-Context Learning section for a deeper explanation of this approach.

Fine-tuning, by contrast, involves training a pre-trained model on a custom dataset to adapt it to a specific task. For example, a medical diagnosis model might be fine-tuned on a dataset of patient records and expert annotations. This process modifies the model’s parameters, making it more accurate for the target task but requiring significant computational resources and time. For more details on fine-tuning workflows, refer to the Understanding Fine-Tuning section.
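As a minimal sketch of the in-context learning approach described above, the snippet below assembles a few-shot prompt for classifying customer support queries. The function name, the category labels, and the prompt layout are all hypothetical choices made for illustration; the article does not prescribe a format, and no model API is called here.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Build a prompt that shows labeled examples before the new query.

    The model's weights are never touched: the task is conveyed entirely
    through the text of the prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Query: {text}")
        lines.append(f"Category: {label}")
        lines.append("")
    # Leave the final category blank so the model completes it.
    lines.append(f"Query: {query}")
    lines.append("Category:")
    return "\n".join(lines)


# Hypothetical examples and labels, for illustration only.
EXAMPLES = [
    ("My account is locked.", "account_access"),
    ("I was charged twice this month.", "billing"),
]

prompt = build_few_shot_prompt(
    "Classify each customer support query.",
    EXAMPLES,
    "I can't reset my password.",
)
print(prompt)
```

The resulting string would be sent as the input to whichever model you use. Changing the task means editing the examples, not retraining anything, which is the main reason this route is usually quicker to set up than fine-tuning.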

course

Bootcamp

AI bootcamp 2

This advanced AI Bootcamp teaches you to design, debug, and optimize full-stack AI systems that adapt over time. You will master byte-level models, advanced decoding, and RAG architectures that integrate text, images, tables, and structured data. You will learn multi-vector indexing, late interaction, and reinforcement learning techniques like DPO, PPO, and verifier-guided feedback. Through 50+ hands-on labs using Hugging Face, DSPy, LangChain, and OpenPipe, you will graduate able to architect, deploy, and evolve enterprise-grade AI pipelines with precision and scalability.

course

Pro

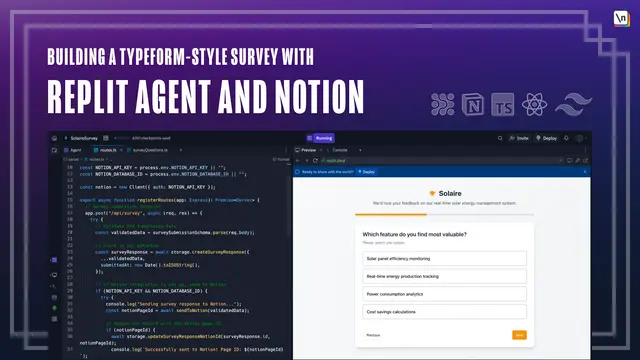

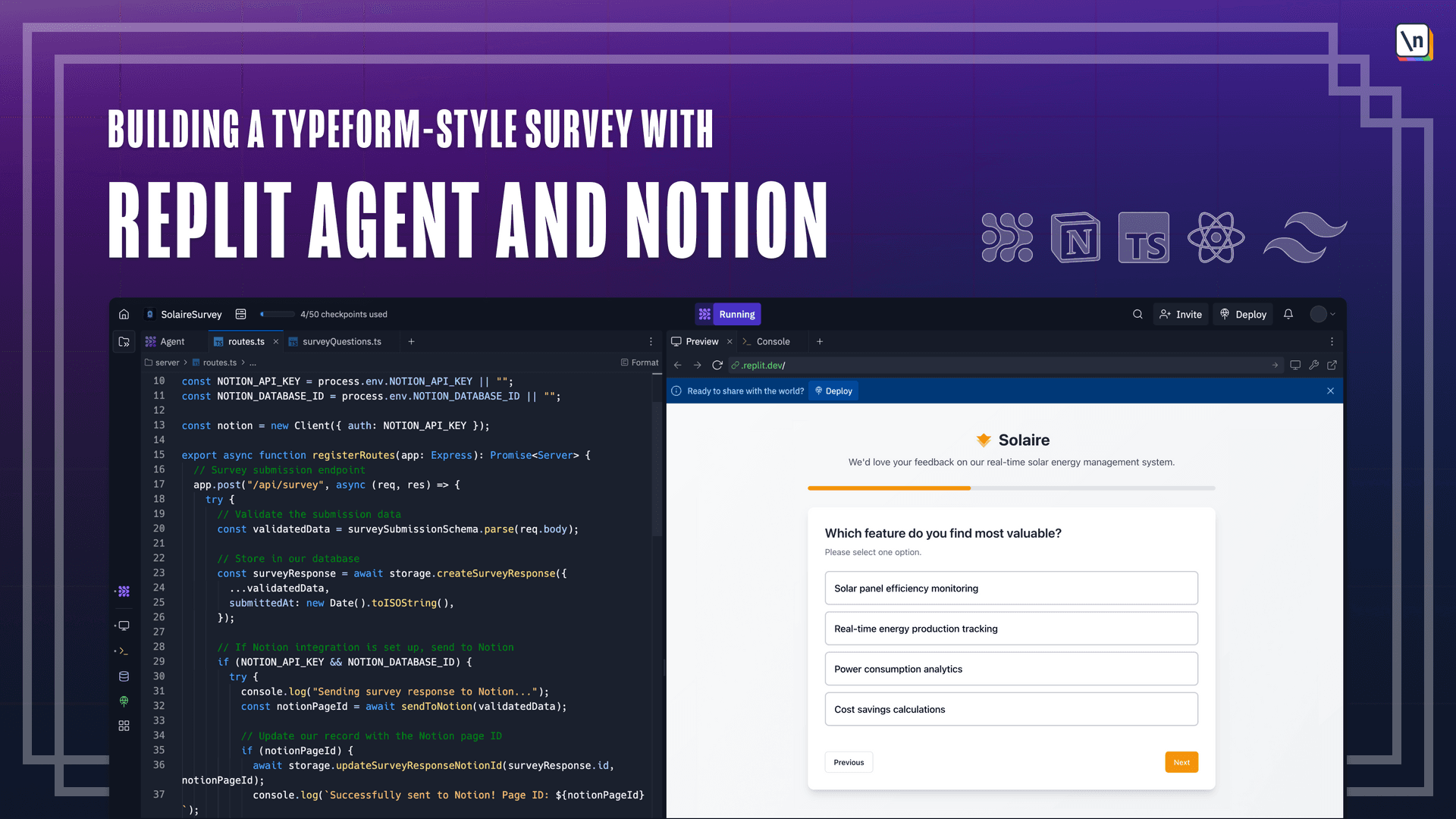

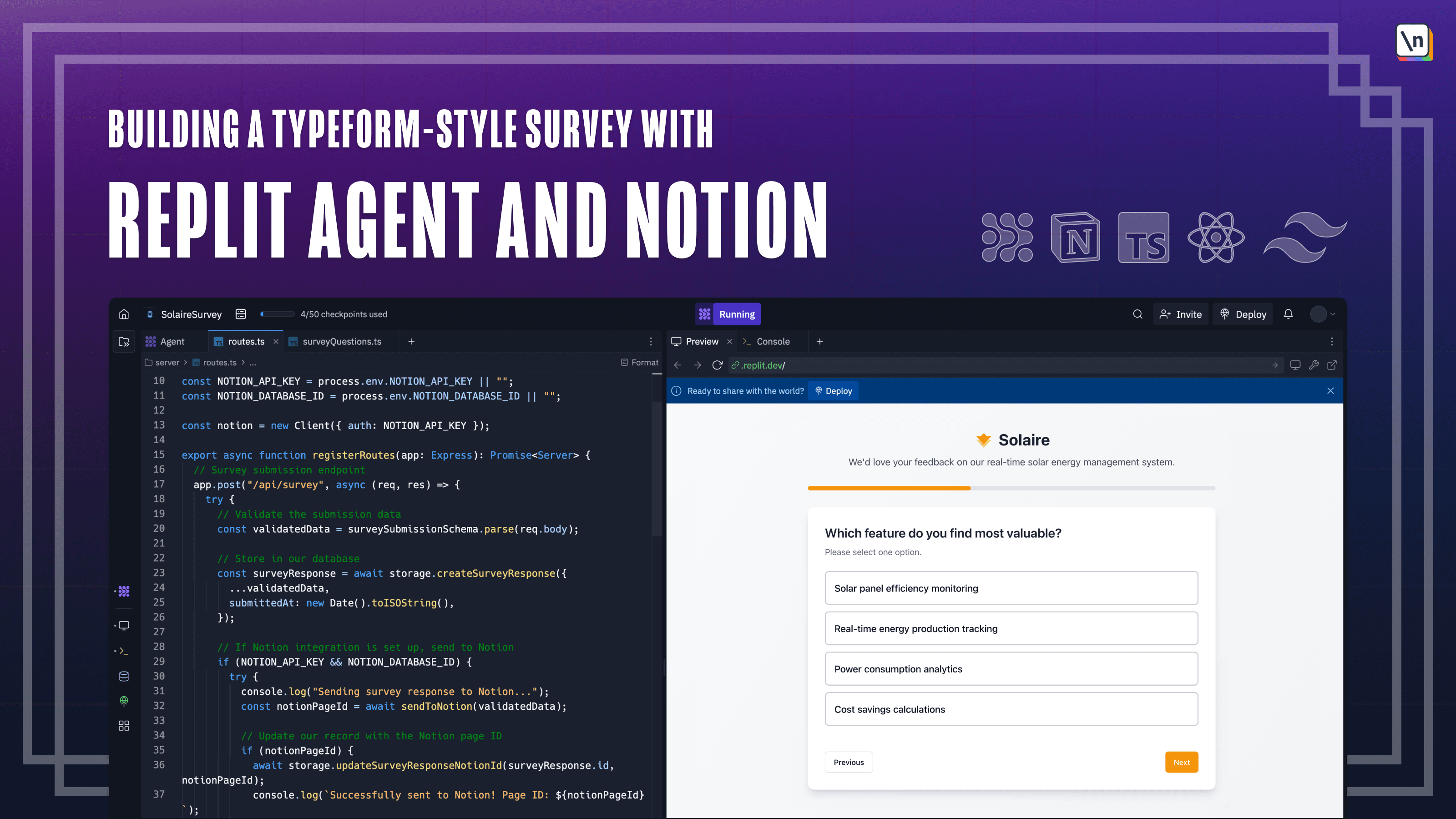

Building a Typeform-Style Survey with Replit Agent and Notion

Learn how to build beautiful, fully-functional web applications with Replit Agent, an advanced AI-coding agent. This course will guide you through the workflow of using Replit Agent to build a Typeform-style survey application with React and TypeScript. You will learn effective prompting techniques, explore and debug code that's generated by Replit Agent, and create a custom Notion integration for forwarding survey responses to a Notion database.

course

Pro

30-Minute Fullstack Masterplan

Create a masterplan that contains all the information you'll need to start building a beautiful and professional application for yourself or your clients. In just 30 minutes you'll know what features you'll need, which screens, how to navigate them, and even what your database tables should look like.

course

Pro

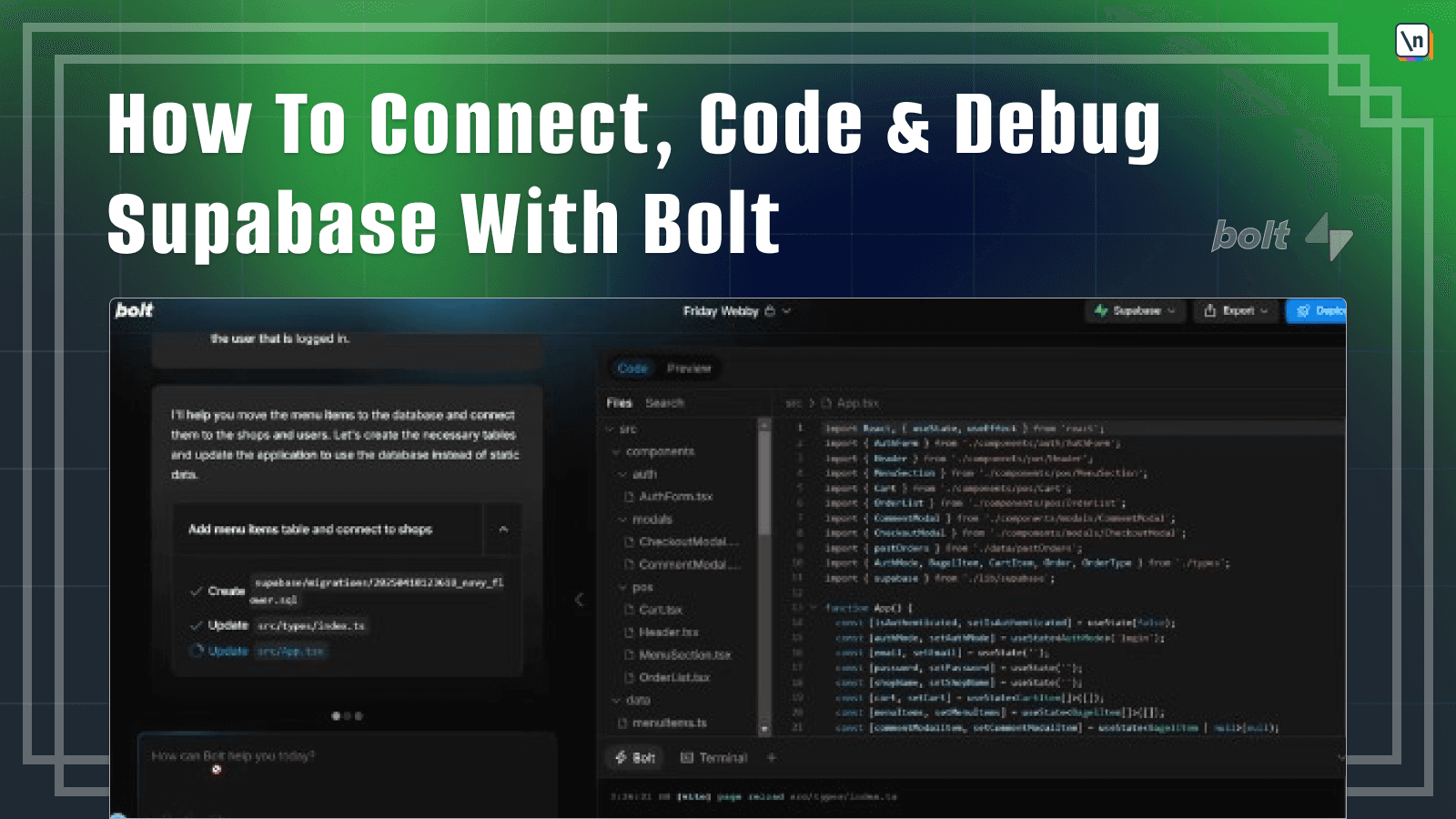

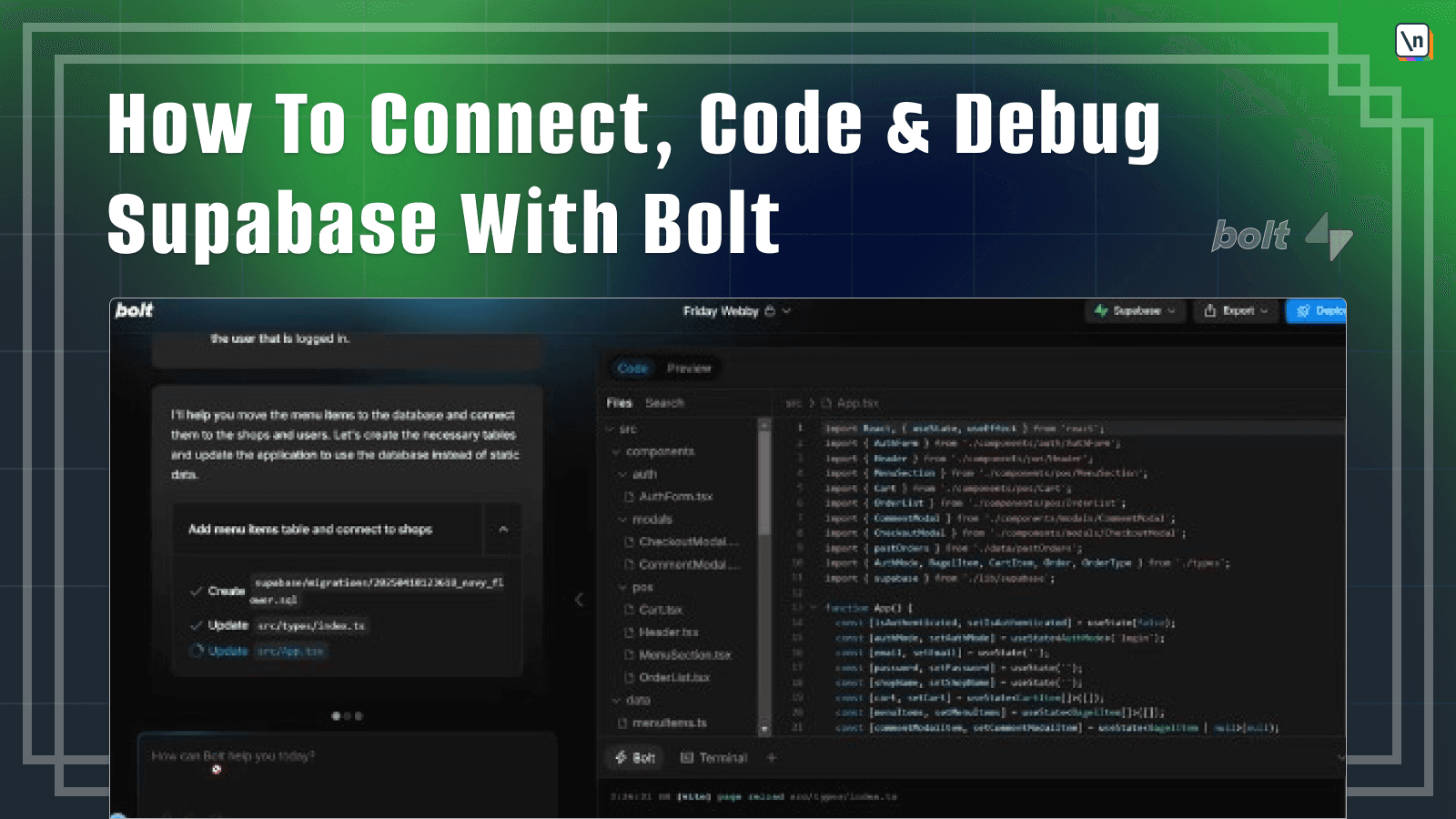

Lightspeed Deployments

A continuation of 'Overnight Fullstack Applications' and 'How To Connect, Code & Debug Supabase With Bolt'. This workshop recording shows you how to take an app and deploy it on the web in 3 different ways. All 3 deployments happen in only 30 minutes (10 minutes each), so you can focus on what matters: the actual app.

book

Pro

Fullstack React with TypeScript

Learn Pro Patterns for Hooks, Testing, Redux, SSR, and GraphQL

book

Pro

Security from Zero

Practical Security for Busy People

book

Pro

JavaScript Algorithms

Learn Data Structures and Algorithms in JavaScript

book

Pro

How to Become a Web Developer: A Field Guide

A Field Guide to Your New Career

book

Pro

Fullstack D3 and Data Visualization

The Complete Guide to Developing Data Visualizations with D3

EXPLORE RECENT TITLES BY NEWLINE

Expand your skills with in-depth, modern web development training

Our students work at

Stop living in tutorial hell

Binge-watching hundreds of clickbait-y tutorials on YouTube. Reading hundreds of low-effort blog posts. You're learning a lot, but you're also struggling to apply what you've learned to your work and projects. Worst of all, uncertainty looms over the next phase of your career.

How do I climb the engineering career ladder?

How do I continue moving toward technical excellence?

How do I move from entry-level developer to senior/lead developer?

Learn from senior engineers who've been in your position before.

Taught by senior engineers at companies like Google and Apple, newline courses are hyper-focused, project-based tutorials that teach students how to build production-grade, real-world applications with industry best practices!

newline courses cover popular libraries and frameworks like React, Vue, Angular, D3.js and more!

With 500+ hours of video content across all newline courses, and new courses being released every month, you will always find yourself mastering a new library, framework or tool.

At the low cost of $40 per month, the newline Pro subscription gives you unlimited access to all newline courses and books, including early access to all future content. Go from zero to hero today! 🚀

Level up with the newline pro subscription

Ready to take your career to the next stage?

newline pro subscription

- Unlimited access to 60+ newline Books, Guides and Courses

- Interactive, Live Project Demos for every newline Book, Guide and Course

- Complete Project Source Code for every newline Book, Guide and Course

- 20% Discount on every newline Masterclass Course

- Discord Community Access

- Full Transcripts with Code Snippets

Explore newline courses

Explore our courses and find the one that fits your needs. We have a wide range of courses from beginner to advanced level.

Explore newline books

Explore our books and find the one that fits your needs.

Newline fits learning into any schedule

Your time is precious. Regardless of how busy your schedule is, newline authors produce high-quality content across multiple mediums to make learning a regular part of your life.

Have a long commute or trip without any reliable internet connection options?

Download one of the 15+ books, available in PDF/EPUB/MOBI formats for accessibility on any device.

Have time to sit down at your desk with a cup of tea?

Watch 500+ hours of video content across all newline courses.

Only have 30 minutes over a lunch break?

Explore 1-minute shorts and dive into 3-5 minute videos, each focusing on individual concepts for a compact learning experience.

In fact, you can customize your learning experience as you see fit in the newline student dashboard:

Building a Beeswarm Chart with Svelte and D3

Connor Rothschild

Hovering over elements behind a tooltip

Connor explains how setting the CSS property pointer-events to none allows users to hover over elements behind a tooltip in SVG data visualizations.

newline content is produced with editors

Providing practical programming insights & succinctly edited videos

All aimed at delivering a seamless learning experience

Find out why 100,000+ developers love newline

See what students have to say about newline books and courses

José Pablo Ortiz Lack

Full Stack Software Engineer at Pack & Pack

I got a job offer, thanks in a big part to your teaching. They sent a test as part of the interview process, and this was a huge help to implement my own Node server.

This has been a really good investment!

Meet the newline authors

newline authors possess a wealth of industry knowledge and an infinite passion for sharing their knowledge with others. newline authors explain complex concepts with practical, real-world examples to help students understand how to apply these concepts in their work and projects.

LOOKING TO TURN YOUR EXPERTISE INTO EDUCATIONAL CONTENT?

At newline, we're always eager to collaborate with driven individuals like you, whether you come with years of industry experience, or you've been sharing your tech passion through YouTube, Codepens, or Medium articles.

We're here not just to host your course, but to foster your growth as a recognized and respected published instructor in the community. We'll help you articulate your thoughts clearly, provide valuable content feedback and suggestions, all towards publishing a course students will value.

At newline, you can focus on what matters most: sharing your expertise. We'll handle emails, marketing, and customer support for your course, so you can concentrate on creating amazing content.

newline offers various platforms to engage with a diverse global audience, amplifying your voice and name in the community.

From outlining your first lesson to launching the complete course, we're with you every step of the way, guiding you through the course production process.

In just a few months, you could not only jumpstart numerous careers and generate a consistent passive income with your course, but also solidify your reputation as a respected instructor within the community.
