Lessons

Advanced RAG
Power AI course

- Intro to RAG and Why LLMs Need External Knowledge
- LLM Limitations and How Retrieval Fixes Hallucinations
- How RAG Combines Search + Generation Into One System
- Fresh Data Retrieval to Overcome Frozen Training Cutoffs
- Context Engineering for Giving LLMs the Right Evidence
- Multi-Agent RAG and Routing Queries to the Right Tools
- Retrieval Indexes: Vector DBs, APIs, SQL, and Web Search
- Query Routing With Prompts and Model-Driven Decision Logic
- API Calls vs RAG: When You Need Data vs Full Answers
- Tool Calling for Weather, Stocks, Databases, and More
- Chunking Long Documents Into Searchable Units
- Chunk Size Trade-offs for Precision vs Broad Context
- Metadata Extraction to Link Related Chunks Together
- Semantic Search Using Embeddings for Nearest-Neighbor Retrieval
- Image and Multimodal Handling for RAG Pipelines
- Text-Based Image Descriptions vs True Image Embeddings
- Query Rewriting for Broad, Vague, or Ambiguous Questions
- Hybrid Retrieval Using Metadata + Embeddings Together
- Rerankers to Push the Correct Chunk to the Top
- Vector Databases and How They Index Embeddings at Scale
- Term-Based vs Embedding-Based vs Hybrid Search
- Multi-Vector RAG and When to Use Multiple Embedding Models
- Retrieval Indexes Beyond Vector DBs: APIs, SQL, Search Engines
- Generation Stage: Stitching Evidence Into Final Answers
- Tool Calling With Multiple Retrieval Sources for Complex Tasks
- Synthetic Data for Stress-Testing Retrieval Quality Early
- RAG vs Fine-Tuning: When to Retrieve and When to Update the Model
- Prompt Patterns for Retrieval-Driven Generation
- Evaluating Retrieval: Recall, Relevance, and Chunk Quality
- Building End-to-End RAG Systems for Real Applications

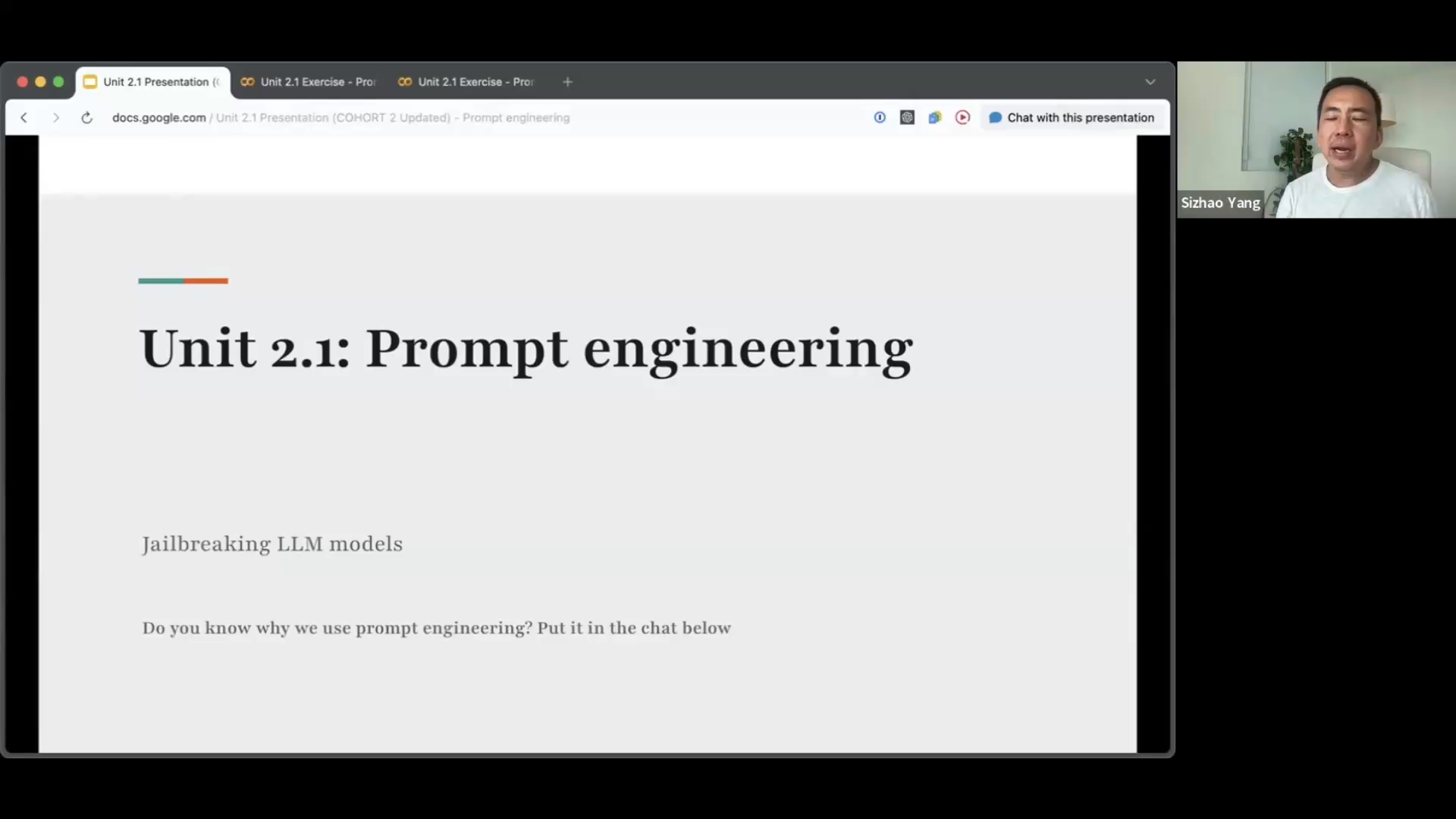

Advanced Prompt Engineering
Power AI course

- Intro to Prompt Engineering and Why It Shapes Every LLM Response
- How Prompts Steer the Probability Space of an LLM
- Context Engineering for Landing in the Right “Galaxy” of Meaning
- Normal Prompts vs Engineered Prompts and Why Specificity Wins
- Components of a High-Quality Prompt: Instruction, Style, Output Format
- Role-Based Prompting for Business, Coding, Marketing, and Analysis Tasks
- Few-Shot Examples for Teaching Models How to Behave
- Synthetic Data for Scaling Better Prompts and Personalization
- Choosing the Right Model Using Model Cards and Targeted Testing
- When to Prompt First vs When to Reach for RAG or Fine-Tuning
- Zero-Shot, Few-Shot, and Chain-of-Thought Prompting Techniques
- PAL and Code-Assisted Prompting for Higher Accuracy
- Multi-Prompt Reasoning: Self-Consistency, Prompt Chaining, and Divide-and-Conquer
- Tree-of-Thought and Branching Reasoning for Hard Problems
- Tool-Assisted Prompting and External Function-Calling
- DSPy for Automatic Prompt Optimization With Reward Functions
- Understanding LLM Limitations: Hallucinations, Fragile Reasoning, Memory Gaps
- Temperature, Randomness, and How to Control Output Stability
- Defensive Prompting to Resist Prompt Injection and Attacks
- Blocklists, Allowlists, and Instruction Defense for Safer Outputs
- Sandwiching and Random Enclosure for Better Security
- XML and Structured Tagging for Reliable, Parseable AI Output
- Jailbreak Prompts and How Attackers Trick Models
- Production-Grade Prompts for Consistency, Stability, and Deployment
- LLM-as-Judge for Evaluating Prompt Quality and Safety
- Cost Optimization: How Better Prompts Reduce Token Usage

RAG

- Intro to RAG and Why LLMs Need External Knowledge
- LLM Limitations and How Retrieval Fixes Hallucinations
- How RAG Combines Search + Generation Into One System
- Fresh Data Retrieval to Overcome Frozen Training Cutoffs
- Context Engineering for Giving LLMs the Right Evidence
- Multi-Agent RAG and Routing Queries to the Right Tools
- Retrieval Indexes: Vector DBs, APIs, SQL, and Web Search
- Query Routing With Prompts and Model-Driven Decision Logic
- API Calls vs RAG: When You Need Data vs Full Answers
- Tool Calling for Weather, Stocks, Databases, and More
- Chunking Long Documents Into Searchable Units
- Chunk Size Trade-offs for Precision vs Broad Context
- Metadata Extraction to Link Related Chunks Together
- Semantic Search Using Embeddings for Nearest-Neighbor Retrieval
- Image and Multimodal Handling for RAG Pipelines
- Text-Based Image Descriptions vs True Image Embeddings
- Query Rewriting for Broad, Vague, or Ambiguous Questions
- Hybrid Retrieval Using Metadata + Embeddings Together
- Rerankers to Push the Correct Chunk to the Top
- Vector Databases and How They Index Embeddings at Scale
- Term-Based vs Embedding-Based vs Hybrid Search
- Multi-Vector RAG and When to Use Multiple Embedding Models
- Retrieval Indexes Beyond Vector DBs: APIs, SQL, Search Engines
- Generation Stage: Stitching Evidence Into Final Answers
- Tool Calling With Multiple Retrieval Sources for Complex Tasks
- Synthetic Data for Stress-Testing Retrieval Quality Early
- RAG vs Fine-Tuning: When to Retrieve and When to Update the Model
- Prompt Patterns for Retrieval-Driven Generation
- Evaluating Retrieval: Recall, Relevance, and Chunk Quality
- Building End-to-End RAG Systems for Real Applications

Prompt Engineering

- Intro to Prompt Engineering and Why It Shapes Every LLM Response
- How Prompts Steer the Probability Space of an LLM
- Context Engineering for Landing in the Right “Galaxy” of Meaning
- Normal Prompts vs Engineered Prompts and Why Specificity Wins
- Components of a High-Quality Prompt: Instruction, Style, Output Format
- Role-Based Prompting for Business, Coding, Marketing, and Analysis Tasks
- Few-Shot Examples for Teaching Models How to Behave
- Synthetic Data for Scaling Better Prompts and Personalization
- Choosing the Right Model Using Model Cards and Targeted Testing
- When to Prompt First vs When to Reach for RAG or Fine-Tuning
- Zero-Shot, Few-Shot, and Chain-of-Thought Prompting Techniques
- PAL and Code-Assisted Prompting for Higher Accuracy
- Multi-Prompt Reasoning: Self-Consistency, Prompt Chaining, and Divide-and-Conquer
- Tree-of-Thought and Branching Reasoning for Hard Problems
- Tool-Assisted Prompting and External Function-Calling
- DSPy for Automatic Prompt Optimization With Reward Functions
- Understanding LLM Limitations: Hallucinations, Fragile Reasoning, Memory Gaps
- Temperature, Randomness, and How to Control Output Stability
- Defensive Prompting to Resist Prompt Injection and Attacks
- Blocklists, Allowlists, and Instruction Defense for Safer Outputs
- Sandwiching and Random Enclosure for Better Security
- XML and Structured Tagging for Reliable, Parseable AI Output
- Jailbreak Prompts and How Attackers Trick Models
- Production-Grade Prompts for Consistency, Stability, and Deployment
- LLM-as-Judge for Evaluating Prompt Quality and Safety
- Cost Optimization: How Better Prompts Reduce Token Usage

Synthetic Data
Power AI course

- Intro to Synthetic Data and Why It Matters in Modern AI
- What Synthetic Data Really Is vs Common Misconceptions
- How Synthetic Data Fills Gaps When Real Data Is Limited or Unsafe
- The Synthetic Data Flywheel: Generate → Evaluate → Iterate
- Using Synthetic Data Across Pretraining, Finetuning, and Evaluation
- Synthetic Data for RAG: How It Stress-Tests Retrieval Systems
- Fine-Tuning with Synthetic Examples to Update Model Behavior
- When to Use RAG vs Fine-Tuning for Changing Information
- Building RAG Systems Like Lego: LLM + Vector DB + Retrieval
- How Vector Databases Reduce Hallucinations and Improve Accuracy
- Generating Edge Cases, Adversarial Queries, and Hard Negatives
- Control Knobs for Diversity: Intent, Persona, Difficulty, Style
- Guardrails and Bias Control Using Prompt Engineering and DPO
- Privacy Engineering with Synthetic Data for Safe Testing
- Debugging AI Apps Using Synthetic Data Like a Developer Debugs Code
- LLM-as-Judge for Fast, Cheap, Scalable Data Quality Checks
- Axial Coding: Turning Model Failures Into Actionable Error Clusters
- Evaluation-First Loops: The Only Way to Improve Synthetic Data Quality
- Components of High-Quality Prompts for Synthetic Data Generation
- User Query Generators for Realistic Customer Support Scenarios
- Chatbot Response Generators for Complete and Partial Solutions
- Error Analysis to Catch Hallucinations, Bias, and Structure Failures
- Human + LLM Evaluation: Combining Experts With Automated Judges
- Model Cards and Benchmarks for Understanding Model Capabilities

Neural Network Fundamentals
Power AI course

- Feedforward networks as transformer core
- Linear layers for learned projections
- Nonlinear activations enable expressiveness
- SwiGLU powering modern FFN blocks
- MLPs refine token representations
- LayerNorm stabilizes deep training
- Dropout prevents co-adaptation overfitting
- Skip connections preserve information flow
- Positional encoding injects word order
- NLL loss guides probability learning
- Encoder vs decoder architectures explained
- FFNN + attention form transformer blocks

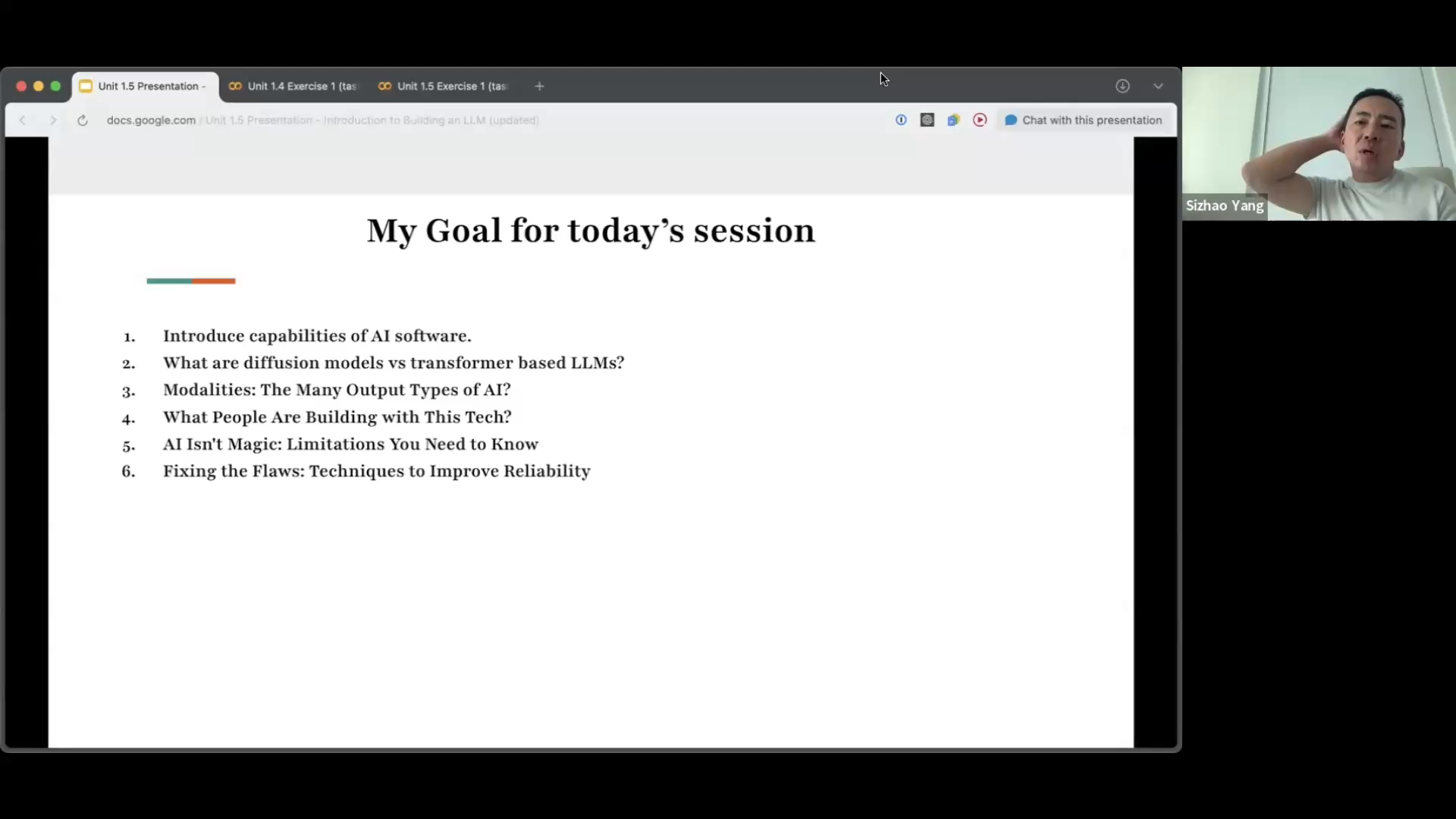

Introduction to Building an LLM
Power AI course

- Intuition for decoder-only LLMs
- Tokens, embeddings, transformer pipeline
- Autoregressive next-token generation
- Generative AI modalities overview
- Diffusion vs transformer model families
- Inference flow and prompt processing
- Build a real LLM inference API
- Architecture: attention, context, decoding
- Training phases: pretrain to RLHF
- Vertical vs generic LLM design
- Distillation, quantization, efficient scaling
- Reasoning models: Chain-of-Thought and Test-Time Compute
- Hands-on Exercises

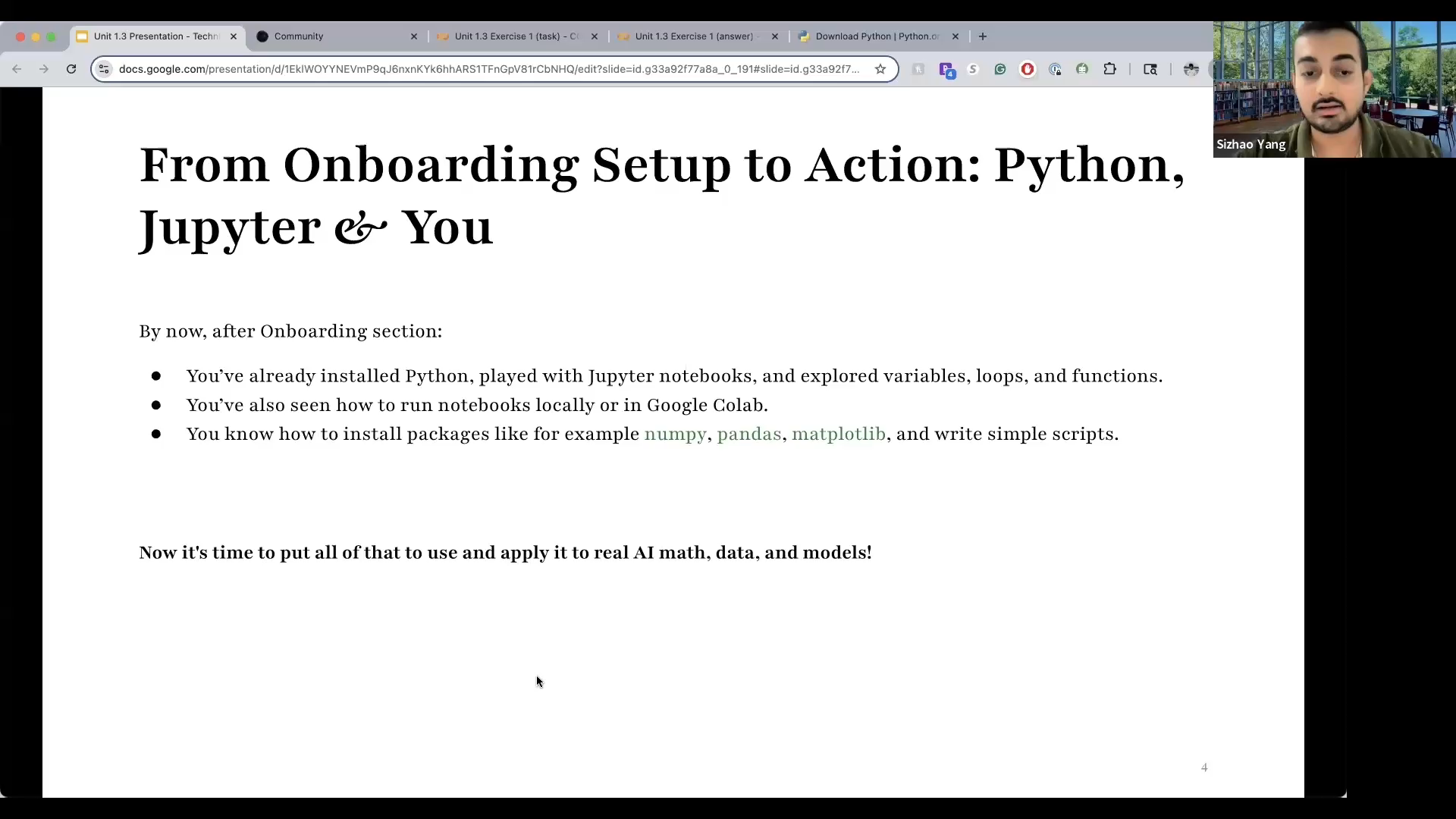

Technical Orientation (Python, NumPy, Probability, Statistics, Tensors)
Power AI course

- How AI Thinks in Numbers: Dot Products and Matrix Logic
- NumPy Power-Tools: The Math Engine Behind Modern AI
- Introduction To Machine Learning Libraries
- Two and Three Dimensional Arrays
- Data as Fuel: Cleaning, Structuring, and Transforming with Pandas
- Normalization in Data Processing: Teaching Models to Compare Apples to Apples
- Probability Foundations: How Models Reason About the Unknown
- The Bell Curve in AI: Detecting Outliers and Anomalies
- Evaluating Models Like a Scientist: Bootstrapping, T-Tests, Confidence Intervals
- Transformers: The Architecture That Gave AI Its Brain
- Diffusion Models: How AI Creates Images, Video, and Sound
- Activation Functions: Teaching Models to Make Decisions
- Vectors and Tensors: The Language of Deep Learning
- GPUs, Cloud, and APIs: How AI Runs in the Real World

Attention Layer
Power AI course

- Why context is fundamental in LLMs
- Limits of n-grams, RNNs, embeddings
- Self-attention solves long-range context
- QKV: query–key–value mechanics
- Dynamic contextual embeddings per token
- Attention weights determine word relevance
- Multi-head attention = parallel perspectives
- GQA reduces attention compute cost
- Mixture-of-experts for specialized attention
- Editing and modifying transformer layers
- Decoder-only vs encoder–decoder framing
- Building context-aware prediction systems

Multimodal Embeddings
Power AI course

- Foundations of multimodal representation learning
- Text, image, audio, video embeddings
- Contrastive learning for cross-modal alignment
- Shared latent spaces across modalities
- Vision encoders and patch tokenization
- Transformer encoders for text meaning
- Audio preprocessing and spectral features
- Time-series tokenization via SAX or VQ
- Fusion modules for modality alignment
- Cross-attention for integrated reasoning
- Zero-shot retrieval and multimodal search
- Real-world multimodal applications overview