Traditional vs Reasoning Models

This lesson preview is part of the The Basics of Prompt Engineering course and can be unlocked immediately with a \newline Pro subscription or a single-time purchase. Already have access to this course? Log in here.

Get unlimited access to The Basics of Prompt Engineering, plus 90+ \newline books, guides and courses with the \newline Pro subscription.

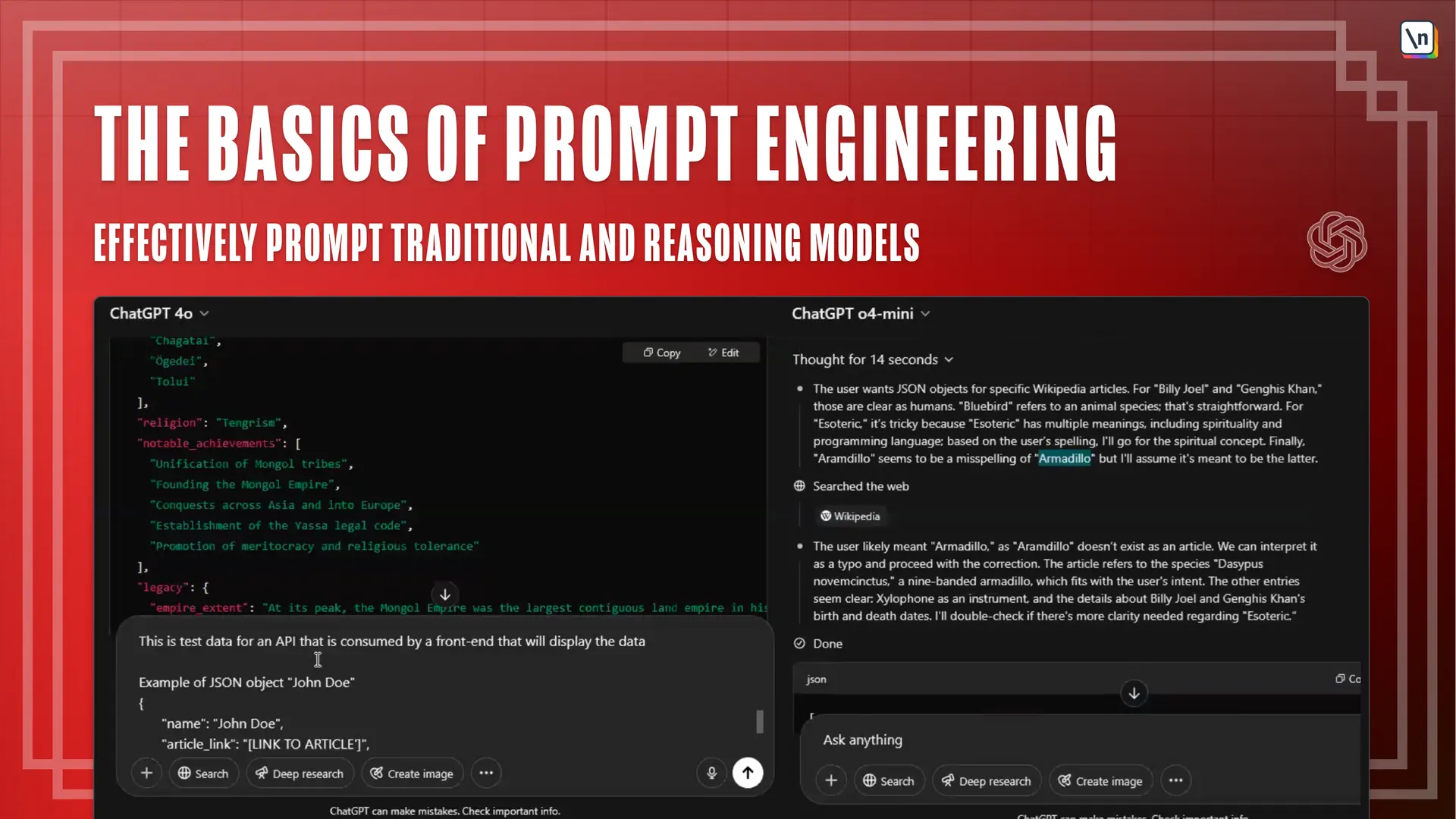

[00:00 - 00:18] So, moving on to traditional versus reasoning models: most mainline LLM providers offer both as part of their product lineup. To use GPT as an example, there's GPT-4 and that whole line of more traditional models, and then the o3 reasoning models and such.

[00:19 - 00:25] Most mainline providers will offer a couple of different options. So what makes reasoning models different?

[00:26 - 00:56] And why does it pay to prompt them differently? A traditional model takes in your input string, does its black-box mathematical, statistical magic, and hands back another string. A reasoning model, instead of just predicting a string of words, actually analyzes the prompt you put in and splits it up into multiple steps, each of which is its own separate LLM call. It's a little more complicated than that under the hood.

[00:57 - 01:02] But at a high level, that's basically what's happening, and this is called chain of thought, or CoT.
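The multi-step workflow described above can be pictured with a toy sketch. This is an illustration under loud assumptions, not how any vendor actually implements its reasoning models: `call_llm`-style access is represented by a hypothetical `stub_llm` function that returns canned text so the example runs offline, and the plan / solve-each-step / combine structure is one simplified way to model chain of thought as a series of separate LLM calls.

```python
from typing import Callable, List

# Hypothetical stand-in for any text-completion API: prompt string in, string out.
LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Canned responses (hypothetical) so the sketch runs without network access."""
    if prompt.startswith("PLAN:"):
        return "Identify the quantities\nCompute the total\nState the answer"
    if prompt.startswith("STEP:"):
        # Echo back which step was "solved", taken from the prompt's first line.
        return "done: " + prompt.splitlines()[0].removeprefix("STEP: ")
    return "FINAL ANSWER based on " + str(prompt.count("done:")) + " steps"


def chain_of_thought(question: str, llm: LLM) -> str:
    # 1. One call asks the model to split the problem into steps.
    plan = llm(f"PLAN: break this into steps:\n{question}")
    steps: List[str] = [s.strip() for s in plan.splitlines() if s.strip()]

    # 2. Each step is its own call, with earlier results fed forward as context.
    notes: List[str] = []
    for step in steps:
        context = "\n".join(notes)
        notes.append(llm(f"STEP: {step}\nQuestion: {question}\nSo far:\n{context}"))

    # 3. A final call combines the intermediate results into one answer.
    return llm("COMBINE:\n" + "\n".join(notes))


print(chain_of_thought("What is 2 + 3?", stub_llm))  # FINAL ANSWER based on 3 steps
```

Even this toy version makes five model calls (one plan, three steps, one combine) where a traditional model would make one, which hints at where the extra cost and latency come from. Requires Python 3.9+ for `str.removeprefix`.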

[01:03 - 01:16] And in a lot of ways it's the same technology, right? It's just a workflow that's meant to imitate a little more closely the way a human does deductive reasoning.

[01:17 - 01:40] And in a lot of cases this does get you better results, because the workflow has multiple steps, corrects itself, and parses things more methodically before taking an action. Because this is much more computationally expensive, and because the results are better in a lot of cases, reasoning models tend to cost more.
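To make the cost point concrete, here is a back-of-the-envelope sketch. All numbers are made up for illustration: the per-token prices and the 2,000 hidden "thinking" tokens are hypothetical, and the assumption that reasoning tokens are billed at the output rate should be checked against your provider's pricing page.

```python
# Hypothetical prices in USD per 1M tokens; not any real provider's rates.
PRICE_IN_PER_M = 2.0
PRICE_OUT_PER_M = 8.0


def request_cost(input_tokens: int, output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Estimate one request's cost; reasoning tokens are ASSUMED billed as output."""
    billed_out = output_tokens + reasoning_tokens
    return (input_tokens * PRICE_IN_PER_M + billed_out * PRICE_OUT_PER_M) / 1_000_000


# Same prompt and same visible answer length; the reasoning run also generates
# 2,000 intermediate tokens (an illustrative, made-up figure).
plain = request_cost(1_000, 300)
thinking = request_cost(1_000, 300, reasoning_tokens=2_000)
print(f"traditional: ${plain:.4f}  reasoning: ${thinking:.4f}  ratio: {thinking / plain:.1f}x")
# traditional: $0.0044  reasoning: $0.0204  ratio: 4.6x
```

Even at identical per-token prices, the extra intermediate tokens alone multiply the bill, and latency grows with them too.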

[01:41 - 02:00] So I tend to use them only when I think they'll actually add value; for a lot of simpler use cases, traditional models are still the way to go. And if the model is integrated into some kind of back-end system where you're waiting on an API call, using a reasoning model can really slow you down.

[02:01 - 02:07] So it really is a matter of picking the right tool for the job, and that is going to be driven by context.
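One way to encode "the right tool for the job" is a small routing function that looks at the task's context before choosing a model. This is a hypothetical sketch: the model identifiers, the `TaskContext` fields, and the 20-second latency figure are all illustrative assumptions, not part of any provider's API.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; replace with the names your provider uses.
TRADITIONAL_MODEL = "traditional-model"
REASONING_MODEL = "reasoning-model"

# Assumed typical response time of the reasoning model; measure your own.
REASONING_LATENCY_S = 20.0


@dataclass
class TaskContext:
    multi_step: bool            # does the task need planning or deduction?
    latency_budget_s: float     # how long the caller can wait for a response
    high_stakes: bool = False   # is a wrong answer costly enough to justify the spend?


def pick_model(ctx: TaskContext) -> str:
    # Simple, low-stakes tasks: a traditional model is cheaper, faster, and usually enough.
    if not ctx.multi_step and not ctx.high_stakes:
        return TRADITIONAL_MODEL
    # A back-end caller that cannot wait for slower multi-step reasoning.
    if ctx.latency_budget_s < REASONING_LATENCY_S:
        return TRADITIONAL_MODEL
    return REASONING_MODEL


print(pick_model(TaskContext(multi_step=True, latency_budget_s=60.0)))  # reasoning-model
```

In practice the thresholds would come from your own measurements of quality, cost, and latency rather than fixed constants.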