Basics of Prompt Engineering: Introduction

[00:00 - 00:09] Okay, so welcome everyone to today's webinar, The Basics of Prompt Engineering. This is intended to be a very kind of high-level look at prompt engineering.

[00:10 - 00:17] We're not going to delve into maths, we're not going to be doing any coding. This is really about the best practices when prompting LLMs.

[00:18 - 00:23] Why are we doing this webinar? One of the main reasons is we have a lot of other trainings and tutorials going on.

[00:24 - 00:43] Most of those involve using LLM tools or building AI and stuff, but the point is we're doing a lot of stuff around AI tools, how to build stuff for AI, all of that. So this is meant to be a beginner-friendly introduction to how to write good prompts and get consistent results from your LLMs.

[00:44 - 00:56] So we're going to cover a couple of things here. So some of the key concepts for prompt engineering, some of the key concepts for LLMs really, at a very high level.

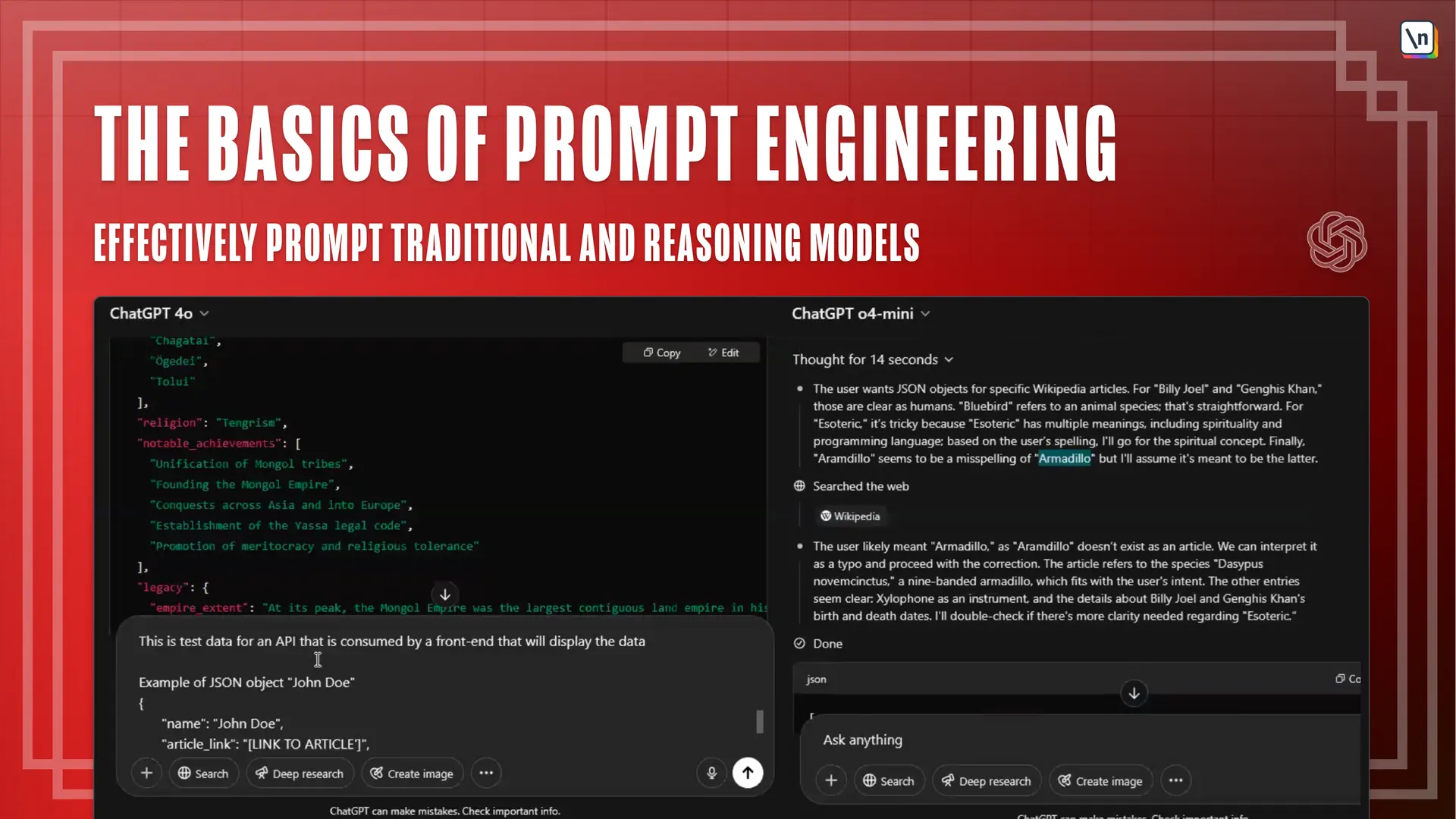

[00:57 - 01:17] So stuff like tokenization, context windows, all of that. We're not going to delve into more technical stuff like RAG and the kind of maths and statistical models. We are going to talk a little bit about the difference between traditional and reasoning models, simply because the way you prompt one versus the way you prompt the other for best results is slightly different.

[01:18 - 01:33] And we are going to cover what those differences are. So again, this is meant to be quite high level and it's meant to be helpful for folks that want to attend our other live trainings with more complicated AI tools.

[01:34 - 01:43] A little bit about myself: my name's Nick, I'm from Ireland. I've lived all over the place, but I've spent about 20 years in Ireland and I'm back there now.

[01:44 - 01:56] Recently, as you can see from the picture, I have long curly hair. This picture is from the Bray to Greystones Cliff Walk on the eastern coast of Ireland, so about 45 minutes away from where I am sitting now.

[01:57 - 02:02] So my background is in computer science. I studied at University College Dublin.

[02:03 - 02:14] Then straight out of college, I started working as a QA engineer in Oracle for about two or three years. Then after that, kind of keeping within QA, I moved to Amazon where I worked on Alexa.

[02:15 - 02:23] I was a QA lead for the launch of Alexa in French. After that, I stayed on at Amazon for another few years where I worked in QA and then later as a technical project manager.

[02:24 - 02:34] And then after that, I moved to the Balkans for a while. My wife is from there, so I lived in the Balkans for a while and set up my own freelance consulting business.

[02:35 - 02:43] And now I am back in Ireland. There's a lot of use cases for LLMs.

[02:44 - 03:09] I think no matter what domain you're in, even if you're in a less technical domain, just with the amount of capital investment that's going into large language models and gen AI in general, workflows pretty much everywhere are starting to incorporate them. Even if you're skeptical about the technology, there are probably some places in your professional life where you're going to have a difficult time avoiding using them.

[03:10 - 03:19] I use them probably less than some of my colleagues, to be fair, but I've used them to generate test data, for example, for data mocking.